We don't write tests. There just isn't time for luxuries.

Aug 22, 2007

Over the last few weeks and months, I've often had the displeasure of being reminded of the common opinion that writing automated tests slows down the development process. "We just didn't have time to write tests" or "Nope, we don't have any test coverage... we just didn't have time" are common expressions of this problematic trend in software development. In order to disregard something widely accepted as a necessary practice, writing automated tests must really take forever, right? Wrong. I call this the testing-slows-us-down argument, and, frankly, I don't buy it at all. Here's why.

Everybody Tests

Everybody has to test their code. It's just that those of us who write automated tests write code to do our testing, where non-testers use humans (themselves, usually) to manually verify correct behavior. So, we can be sure that the testing-slows-us-down argument rests on the premise that manually verifying behavior is faster than writing automated tests.

Investing Time

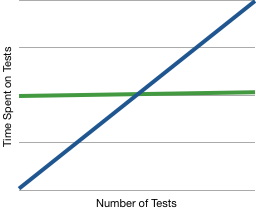

The two methods of testing distribute your time investment differently. Since automated tests run very quickly, the time investment is made in writing them. Running the tests is nearly instantaneous. With manual testing, it is the opposite. Designing the tests takes nearly zero time, whereas actually running the tests takes a measurable amount of time each time you need the test. So, with automated testing, you get most of your time investment out of the way at the outset. Once it's written, it's written. With manual tests, your time investment grows each time you test for something. In order for the testing-slows-us-down argument to remain valid, the total time investment made on manual testing must be less than the time investment required to write the automated tests.

In these terms, the testing-slows-us-down argument can be expressed as follows: The time it takes to write the automated tests for a feature is greater than the total time that will be spent manually testing that feature throughout the lifetime of the project.

Since one of my goals for this article is proving the "no time to test, we need to launch our product" people wrong, I want to show that this (short-sighted) argument is at least as flawed as the testing-slows-us-down argument, if not more so. I'm going to phrase the product-launch argument as follows: The time it takes to write the tests for a feature is greater than the total time that will be spent manually testing that feature until product launch.

Breaking Even

If it takes twenty minutes to write your automated tests, and one minute to test your feature manually, the break-even point for an automated tester is when they've run their tests twenty times. After that point, automated tests become cheaper and cheaper (per test). Manual tests continue to become increasingly expensive over their lifetime. So, the product-launch argument can be rephrased once more, making use of our new terminology: The automated testing break-even point will not be reached before product launch.
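The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and it assumes every manual pass costs the same and that an automated run costs close to nothing, as described earlier.

```python
import math


def total_minutes(runs, upfront_minutes, per_run_minutes):
    """Total time spent after `runs` test runs."""
    return upfront_minutes + runs * per_run_minutes


def break_even_runs(write_minutes, manual_minutes, automated_minutes=0.0):
    """Smallest number of runs at which automated testing costs no more than manual testing.

    Hypothetical helper: assumes a constant cost per run for both approaches.
    """
    saved_per_run = manual_minutes - automated_minutes
    if saved_per_run <= 0:
        raise ValueError("automation only pays off if a manual run is slower")
    return math.ceil(write_minutes / saved_per_run)


# The example from the text: twenty minutes to write, one minute per manual pass.
runs = break_even_runs(write_minutes=20, manual_minutes=1)
print(runs)  # 20
print(total_minutes(runs, upfront_minutes=20, per_run_minutes=0))  # automated: 20
print(total_minutes(runs, upfront_minutes=0, per_run_minutes=1))   # manual: 20
```

Every run past that point adds a minute to the manual total and essentially nothing to the automated one.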

Developers Developers Developers

Because the cost of manual testing grows considerably each time you run your tests, bringing on extra developers adds a multiplier to your (growing) test cost. Anytime any developer wants to merge their branch back in to trunk, they're going to have to take a look over the whole source tree to make sure they didn't break anything. This means, for the reasonably attentive developer, testing code that they didn't write - going over all of the features. It's the manual testing equivalent of running the whole test suite. Not only is this a time-consuming process, but it is incredibly error prone.

Debugging Time!

No developer can be reasonably expected to remember and meticulously verify all of the functionality expected from an application at each check-in. That's a superhuman expectation. You might even say it's a job better suited to a machine? Seriously, though, code is interdependent. Changes in one area can have impacts all over the application. When relying on humans to verify application behavior, a lot of bugs are going to slip through the cracks.

More bugs means more time spent debugging, which, incidentally, means more time spent testing, for the manual tester. Moreover, it's not uncommon for the same bug to surface repeatedly. We've all seen it. It's called regression, and it's why we automated testers have something wonderful called regression tests.

With every bug, automated testers (like always) make a one-time investment. Since automated testing is far more likely to prevent regressions than manual testing, the time benefits here are two-fold. First, once the regression test is written, it's written, and the behavior doesn't need to be repeatedly verified by hand. Second, the bug is far less likely to resurface. Manual testers may argue, here, that they are capable of adding the regression tests to their regular passes over their code, keeping the bugs out, just the same. While this may be the case for the most superhuman of individuals, the likelihood that an entire team may be capable of such incredible manual testing is very low.

So, as a general rule, I think it's safe to say that automated tests significantly reduce debugging time, by providing a much higher degree of accuracy, and acting as a powerful weapon for preventing regression.
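For anyone who hasn't written one, here is a minimal sketch of what a regression test can look like. The `average` function and the bug it once had are invented for illustration, and the test is written in the plain-function style that a runner such as pytest would pick up.

```python
def average(values):
    """Hypothetical function that once crashed on an empty list."""
    # The fix: an empty list used to raise ZeroDivisionError here.
    if not values:
        return 0.0
    return sum(values) / len(values)


def test_average_of_empty_list_is_zero():
    # Regression test: pins the fixed behavior so the bug can't quietly return.
    assert average([]) == 0.0


def test_average_of_typical_values():
    # Ordinary behavior still works after the fix.
    assert average([2, 4]) == 3.0


# Called directly here so the sketch runs on its own, without a test runner.
test_average_of_empty_list_is_zero()
test_average_of_typical_values()
```

Once that first test exists, nobody has to remember to try the empty-list case by hand again.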

Release Already

It seems pretty clear to me, after a thorough analysis of the testing-slows-us-down argument, that its proponents are, at the least, misguided. Automated testing is at least as fast as manual testing. In writing this article, I thought a lot about why so many people have this common misconception. I think it mostly stems from one of the following.

The most common cause of this misconception is likely naivete. Many of the challenges that really bring out the best in automated testing (and, consequently, the worst in manual testing) are far more evident with bigger projects. While I maintain that automated testing is at least as fast as manual testing on all projects, it's likely that bigger projects will see much bigger benefits. The problem, here, is that big projects often start out as small ones. And, unfortunately, growing pains can cause some of the most difficult problems with keeping software working properly.

My assumption is that the ones who aren't naive are just lazy. Learning how, what, and when to write automated tests can be a difficult undertaking, but it's well worth it. Like writing the tests themselves, a little bit of up-front investment in your skillset will save you loads of time and headaches later on. So, do yourself a favor, and learn to test. You'll thank yourself for it.

Update: See my first response in a new series to some of the discussion surrounding this article.